Proof-of-Concept Door Opening

Finally, I got a working proof of concept of autonomous door opening. This is the first step toward letting ARIPS navigate my apartment even when a room door is closed.

Hardware overview

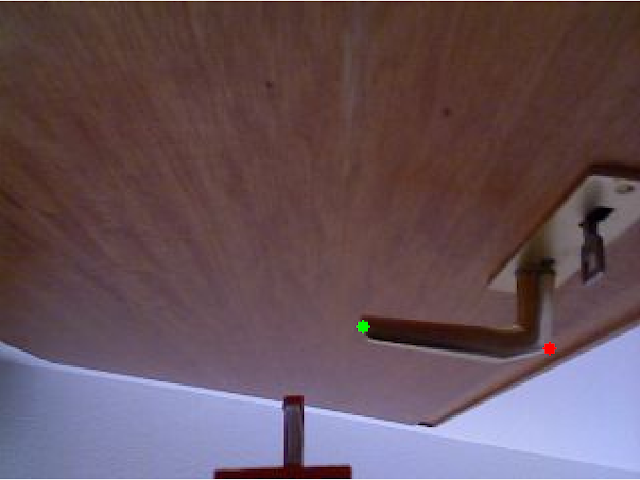

To open a door, I designed a 3D-printed door handle gripper. It has a linear actuator driven by a Feetech SCS009 servo. To amplify the pulling force, the servo first drives a worm gear, which then drives the gripper. Since a full movement of the gripper needs multiple servo turns, I took the position potentiometer out of the servo and mounted it on the inner gear, so that it tracks the gripper's travel rather than a single servo rotation. The gripper is mounted on an additional rig at door handle height. The Kinect is pointed upwards to detect the door handle position.


Software

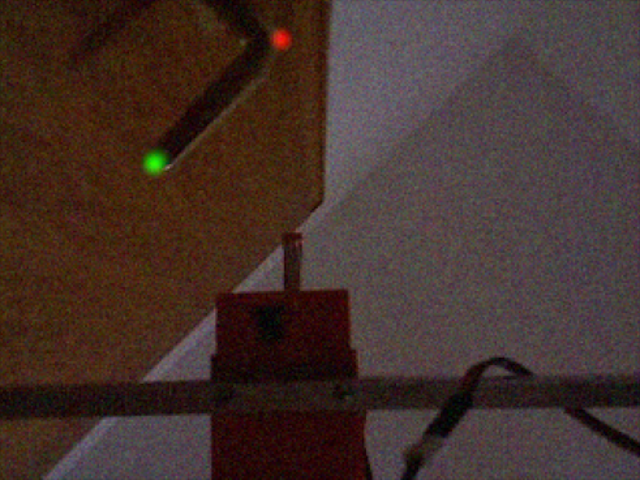

The robot needs to drive right below the door handle to grab it successfully. To find the door handle, I implemented a detector that works on the RGB image of the Kinect. 3D depth data is unfortunately unavailable because the handle is too close to the Kinect. For the detection, a U-Net-inspired convolutional neural network has been trained. The network outputs two heatmaps per RGB image, one for each end of the door handle. The final position of each end is the location of the maximum value in its heatmap.
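The last step, reading a position out of each heatmap, can be sketched with NumPy as follows. This is only a minimal illustration, not the code running on ARIPS: the function name `handle_endpoints`, the `(2, H, W)` array layout, and the `min_score` threshold for rejecting weak peaks are all assumptions made for the example.

```python
import numpy as np


def handle_endpoints(heatmaps, min_score=0.5):
    """Return one (x, y) pixel position per heatmap, or None for a weak peak.

    heatmaps:  array of shape (2, H, W), one channel per end of the handle
               (assumed layout, not taken from the original network).
    min_score: hypothetical confidence threshold for "no handle visible".
    """
    points = []
    for channel in heatmaps:
        # argmax works on the flattened array; unravel_index maps it back to (row, col)
        row, col = np.unravel_index(np.argmax(channel), channel.shape)
        if channel[row, col] < min_score:
            points.append(None)
        else:
            points.append((int(col), int(row)))  # image convention: x = column, y = row
    return points


# Synthetic heatmaps with one clear peak per channel
heatmaps = np.zeros((2, 48, 64), dtype=np.float32)
heatmaps[0, 10, 20] = 0.9
heatmaps[1, 12, 45] = 0.8
endpoints = handle_endpoints(heatmaps)
print(endpoints)  # [(20, 10), (45, 12)]
```

Taking the plain maximum gives pixel-level resolution at the heatmap's scale; if the heatmaps are smaller than the input image, the coordinates would still need to be scaled back.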

The training data was collected manually and annotated using a custom Python tool. Currently it consists of about 1200 door handle images plus 300 images without a door handle. For training, the images are augmented with random brightness and contrast adjustments and Gaussian noise.
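An augmentation of this kind could look roughly like the sketch below. The function name `augment` and all parameter ranges (`max_brightness`, `contrast_range`, `noise_std`) are assumed values for illustration, not the ones used for the actual training.

```python
import numpy as np


def augment(image, rng, max_brightness=0.2, contrast_range=(0.8, 1.2), noise_std=0.02):
    """Apply random brightness, contrast and Gaussian noise to one image.

    image: float array with values in [0, 1], shape (H, W, 3).
    All ranges are hypothetical defaults chosen for this example.
    """
    contrast = rng.uniform(*contrast_range)
    brightness = rng.uniform(-max_brightness, max_brightness)
    mean = image.mean()
    # Scale around the mean intensity (contrast), then shift (brightness)
    out = (image - mean) * contrast + mean + brightness
    # Add independent Gaussian noise to every pixel and channel
    out = out + rng.normal(0.0, noise_std, size=image.shape)
    # Keep the result in the valid intensity range
    return np.clip(out, 0.0, 1.0).astype(np.float32)


rng = np.random.default_rng(0)
image = np.full((48, 64, 3), 0.5, dtype=np.float32)  # stand-in for a Kinect frame
augmented = augment(image, rng)
print(augmented.shape, augmented.dtype)
```

Because these adjustments only change pixel intensities and do not move any pixels, the annotated handle positions stay valid for the augmented image.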

| | |
|---|---|
| Kinect’s view of the door handle from below. The start and end points of the door handle have been annotated. | Detection result for a door handle. The input image was augmented by random brightness/contrast adjustment and Gaussian noise. |